Verification-First QA

Most AI QA catches errors after generation. Verification-first grounds reviews in external facts. Here's how to implement it, and where it falls short.

What if you could reduce AI hallucinations before they embed in your content, instead of hunting for them afterward?

Most AI quality assurance follows a predictable pattern: generate content, then review it for errors. The challenge? When AI reviews AI, both models share a fundamental limitation: they don't know what they don't know.

This post explains the verification-first pattern - an approach that aims to address knowledge cutoff limitations by grounding model review in externally verified facts. Like any methodology, it has trade-offs worth understanding.

The Challenge: AI Reviewing AI

When you ask an AI to review AI-generated content, you're asking a model with a knowledge cutoff to validate claims that may reference events after that cutoff. Research from OpenAI explains one reason this is difficult: standard training and evaluation procedures reward guessing over acknowledging uncertainty.

The result? Models sometimes confidently assert things they cannot verify.

Real-World Costs

A case study from Shift Asia describes scenarios where teams used AI-generated code with outdated practices that passed initial tests but failed later audits. While the specific causes vary (training data staleness, knowledge cutoff, or both), the pattern illustrates why verification matters.

According to research on knowledge cutoffs, a single cutoff date oversimplifies how LLMs actually work. Temporal biases in training data and deduplication schemes mean that the same model can be up to date on one resource and stale on another.

The Conventional Flow

1. Generate content with AI

2. Have AI review content (same knowledge cutoff)

3. Human catches remaining issues (resource-intensive)

The limitation: AI reviewing AI may share similar blind spots, though different models may catch different issues.

The Approach: Verification-First

Google Research published findings suggesting it can be beneficial to "add a sufficiency check before generation" in RAG systems. While that research focused specifically on retrieval contexts, the underlying principle - verifying that you have accurate information before proceeding - can inform QA workflows more broadly.

The Pattern

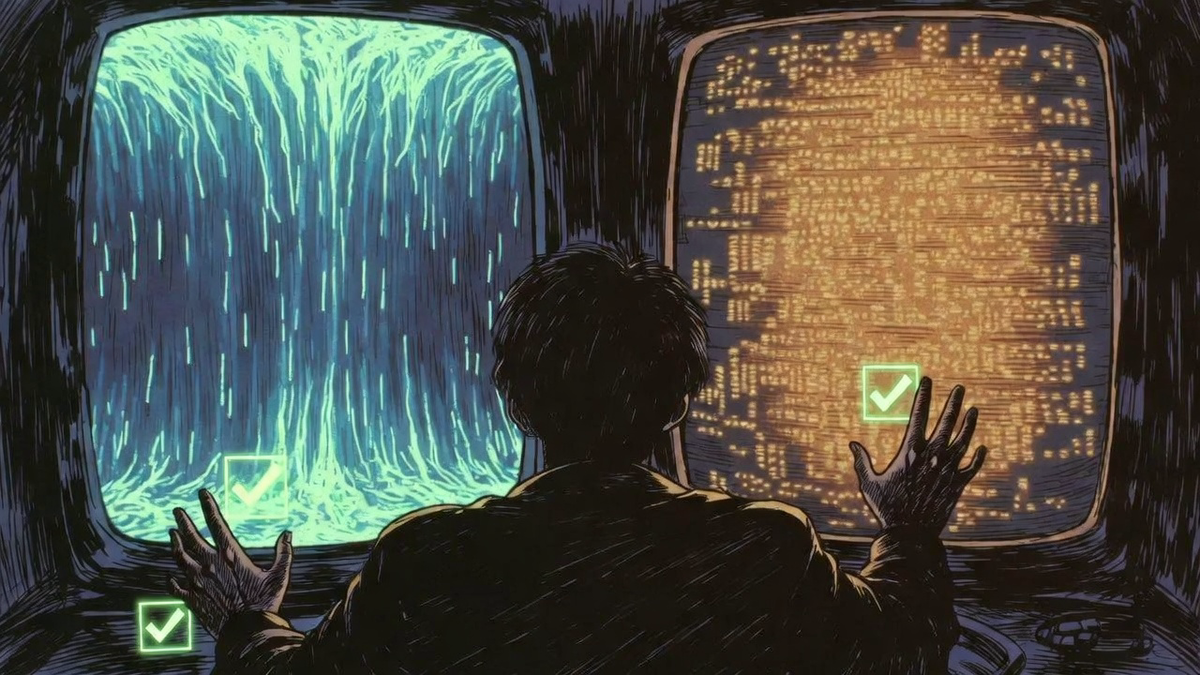

Phase 0: VERIFICATION (external tools, APIs, real-time data)

Phase 1: Model Review (with verified context as reference)

Phase 2: Cross-Validation (models focus on reasoning, not facts)

The idea: external verification can provide more current information than model training data, helping ground subsequent review steps.

Supporting Evidence

Research indicates that grounding responses in verified documents reduces hallucinations, though the size of the improvement varies by implementation. The most robust systems add span-level verification: checking each individual claim against evidence rather than reviewing the content as a whole.

HaluGate, a token-level hallucination detection pipeline, reports high validation accuracy with low inference latency, catching unsupported claims during the generation process itself.

A TechTarget article on fact-checking AI content notes that AI alone often struggles with accuracy in fact-checking scenarios, reinforcing the value of external verification sources.

Important Limitations

Verification-first isn't a complete solution:

- External tools have their own limitations: WebSearch results may be outdated, APIs have rate limits, and not all facts are externally verifiable (proprietary data, emerging events not yet indexed)

- Verification overhead: Additional API calls add latency and cost

- Tool failures: Systems need fallback strategies when verification tools error or return incomplete results

- Reasoning vs. facts: This approach primarily addresses factual accuracy, not reasoning errors (models can still misapply verified facts)
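The tool-failure point above can be handled with an ordered fallback chain. Here is a minimal sketch, assuming each verifier is a plain callable that returns evidence text or raises on failure. `verify_with_fallback`, `VerificationResult`, and the two stub verifiers are hypothetical names for illustration, not part of any real tool.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class VerificationResult:
    claim: str
    status: str               # "verified" or "unverified"
    evidence: Optional[str]   # supporting text, if any verifier answered
    source: Optional[str]     # name of the verifier that answered
    errors: list[str]         # failures seen along the way, kept for logging


def verify_with_fallback(
    claim: str,
    verifiers: Sequence[tuple[str, Callable[[str], Optional[str]]]],
) -> VerificationResult:
    """Try each verifier in order; a tool failure never counts as a pass."""
    errors: list[str] = []
    for name, verifier in verifiers:
        try:
            evidence = verifier(claim)
        except Exception as exc:  # rate limit, timeout, malformed response, ...
            errors.append(f"{name}: {exc}")
            continue
        if evidence:  # None or an empty string means "no answer"
            return VerificationResult(claim, "verified", evidence, name, errors)
        errors.append(f"{name}: no result")
    return VerificationResult(claim, "unverified", None, None, errors)


# Stub verifiers standing in for real tools (hypothetical).
def rate_limited_api(claim: str) -> Optional[str]:
    raise TimeoutError("rate limit exceeded")


def local_docs(claim: str) -> Optional[str]:
    return "docs: matches release notes" if "release" in claim else None


chain = [("api", rate_limited_api), ("docs", local_docs)]
ok = verify_with_fallback("The 2.0 release removed the legacy flag", chain)
gap = verify_with_fallback("Internal revenue grew last quarter", chain)
print(ok.status, ok.source, ok.errors)
print(gap.status, gap.errors)
```

The design choice worth noting: an unverified claim carries its error trail with it, so a reviewer can tell "the tool was down" apart from "no source supports this".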

Multi-Model Validation: Complementary Strengths

Verification-first doesn't mean abandoning model review. It means giving models tasks that play to their strengths.

Complementary Capabilities

Different models have different characteristics. Claude and similar models tend to excel at structured reasoning and comprehensive analysis. Grok and similar models with real-time access can check facts more readily when tool use is available.

The Dual-Model Pattern

The pattern separates tasks by model characteristic:

| Phase | Approach | Focus |
|---|---|---|
| 0 | External Tools | Verify FACTS (current data) |
| 1 | Structured Model | Validate STRUCTURE (checklists) |
| 2 | Adversarial Model | Challenge LOGIC (reasoning gaps) |

The prompt pattern for the adversarial model:

"These claims have been VERIFIED via [tool]: [list]. Focus on LOGIC, REASONING, STRUCTURE - not factual accuracy for verified items."

This context helps the model focus its effort, though it doesn't eliminate all reasoning errors.
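Filling in that prompt pattern is a small string-building job. The sketch below is one way to do it; `build_adversarial_prompt` is a hypothetical helper, and the trailing "Content to review" section is an assumption about how the content gets attached, not something the pattern itself specifies.

```python
def build_adversarial_prompt(tool: str, verified_claims: list[str], content: str) -> str:
    """Fill the adversarial prompt pattern with a tool name and verified claims."""
    if not verified_claims:
        # With nothing verified, the "skip facts" instruction would be misleading.
        raise ValueError("no verified claims to pass as context")
    claims = "\n".join(f"- {claim}" for claim in verified_claims)
    return (
        f"These claims have been VERIFIED via {tool}:\n"
        f"{claims}\n\n"
        "Focus on LOGIC, REASONING, STRUCTURE - not "
        "factual accuracy for verified items.\n\n"
        # Assumption: the content under review is appended after the instructions.
        f"Content to review:\n{content}"
    )


prompt = build_adversarial_prompt(
    tool="WebSearch",
    verified_claims=["Widget 2.0 shipped in 2024"],
    content="Because Widget 2.0 shipped in 2024, all users must have upgraded.",
)
print(prompt)
```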

Cross-model comparison can increase confidence when multiple models agree, while conflicting outputs signal areas needing human review.
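As a rough illustration of that agreement signal, the sketch below compares per-model verdicts on a single item. The function name, the verdict labels, and the `min_agreement` threshold are all assumptions made for the example; real reviews rarely reduce to a single label this cleanly.

```python
from collections import Counter


def compare_verdicts(verdicts: dict[str, str], min_agreement: float = 1.0) -> dict:
    """Return a consensus verdict if enough models agree, else flag for a human."""
    if not verdicts:
        raise ValueError("need at least one model verdict")
    counts = Counter(verdicts.values())
    top_verdict, top_count = counts.most_common(1)[0]
    agreement = top_count / len(verdicts)
    agreed = agreement >= min_agreement
    return {
        "agreement": agreement,
        "consensus": top_verdict if agreed else None,
        "needs_human_review": not agreed,
    }


# Model names here are placeholders, not real model identifiers.
same = compare_verdicts({"model_a": "sound", "model_b": "sound"})
split = compare_verdicts({"model_a": "sound", "model_b": "gap_in_reasoning"})
print(same)
print(split)
```

Agreement raises confidence but does not prove correctness, since models can share the same blind spot; that is why the factual checks happen outside the models first.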

Content-Type-Specific Verification

Different content types benefit from different verification sources.

| Content Type | Useful Verification Tools |
|---|---|
| Blog posts | WebSearch, library docs |
| Security reports | NVD, MITRE databases |
| Code reviews | Dependency checkers |
| Compliance docs | Regulatory databases |

For security content, NVIDIA's Agent Morpheus demonstrates connecting to NVD and threat intelligence sources during analysis - providing authoritative reference data for AI-generated assessments.
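One way to wire up content-type routing is a plain lookup table. In the sketch below, the keys and source labels simply mirror the table above and are hypothetical identifiers, not real client names. The only external fact it relies on is the CVE ID format (`CVE-`, a four-digit year, then a sequence number of at least four digits); it makes no actual calls to NVD or any other service.

```python
import re

# Hypothetical labels mirroring the content-type table; wire these to real clients.
VERIFICATION_SOURCES: dict[str, list[str]] = {
    "blog_post": ["web_search", "library_docs"],
    "security_report": ["nvd", "mitre"],
    "code_review": ["dependency_checker"],
    "compliance_doc": ["regulatory_database"],
}

CVE_PATTERN = re.compile(r"\bCVE-\d{4}-\d{4,}\b")


def sources_for(content_type: str) -> list[str]:
    """Unknown content types get no sources, so the caller can escalate to a human."""
    return VERIFICATION_SOURCES.get(content_type, [])


def extract_cve_ids(text: str) -> list[str]:
    """Collect the distinct CVE identifiers a security report mentions."""
    return sorted(set(CVE_PATTERN.findall(text)))


report = "Affects CVE-2021-44228 and CVE-2021-44228; see also CVE-2014-0160."
print(sources_for("security_report"))
print(extract_cve_ids(report))
```

Each extracted ID becomes one lookup against the routed sources, which keeps the verification step claim-by-claim rather than document-wide.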

Implementation Workflow

A practical implementation:

- Extract claims - Identify discrete factual statements in content

- Verify externally - Query relevant APIs/databases for each claim type

- Handle tool failures - Log verification gaps, flag unverified claims

- Ground model review - Pass verified context to reviewing models

- Separate cognitive tasks - Structured review for completeness; adversarial review for reasoning

- Human oversight - Focus attention on disputed items and verification gaps
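The steps above can be sketched end to end. This is a deliberately naive version under stated assumptions: claim extraction just picks sentences containing a digit (a real extractor would likely use a model or parser), and `lookup` is a hypothetical callable standing in for whatever API or database fits the claim type.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Claim:
    text: str
    evidence: Optional[str] = None


def extract_claims(content: str) -> list[Claim]:
    """Naive extraction: sentences containing a digit are treated as factual claims."""
    sentences = re.split(r"(?<=[.!?])\s+", content.strip())
    return [Claim(s) for s in sentences if re.search(r"\d", s)]


def run_workflow(content: str, lookup: Callable[[str], Optional[str]]) -> dict:
    claims = extract_claims(content)
    for claim in claims:
        try:
            claim.evidence = lookup(claim.text) or None
        except Exception:
            claim.evidence = None  # tool failure: the claim stays unverified
    verified = [c for c in claims if c.evidence is not None]
    gaps = [c for c in claims if c.evidence is None]
    return {
        # Passed to the reviewing models as grounded context.
        "review_context": [f"{c.text} [evidence: {c.evidence}]" for c in verified],
        # Verification gaps go to a human instead of being silently trusted.
        "human_queue": [c.text for c in gaps],
    }


def stub_lookup(text: str) -> Optional[str]:
    """Hypothetical verifier: only knows about one fictional release."""
    return "release notes" if "Widget 2.0" in text else None


sample = "Widget 2.0 shipped in 2024. The team loved it. It handles 500 requests per second."
result = run_workflow(sample, stub_lookup)
print(result["review_context"])
print(result["human_queue"])
```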

The Human Role

Verification-first doesn't eliminate human review. It aims to focus human attention on higher-value decisions:

- Resolving conflicts between verification sources

- Evaluating reasoning quality (not just factual accuracy)

- Making judgment calls on ambiguous cases

- Validating areas where automated verification couldn't help

What to Track

According to discussions on AI verification practices, useful metrics include:

- Citation accuracy (percentage of claims with valid supporting sources)

- Retrieval precision (relevance of verification results)

- Verification coverage (what percentage of claims could be verified)

- Human escalation rate (what requires manual review)
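These metrics are simple ratios once each claim is logged with a few flags. The record shape below is an assumption made for illustration (the field names are hypothetical), and the definitions follow the parenthetical descriptions in the list above.

```python
from dataclasses import dataclass


@dataclass
class ClaimRecord:
    verifiable: bool        # some verification source could be queried for it
    has_valid_source: bool  # a cited source actually supports the claim
    escalated: bool         # sent to a human reviewer


def qa_metrics(records: list[ClaimRecord]) -> dict[str, float]:
    """Compute per-claim ratios; an empty log yields zeros rather than an error."""
    total = len(records)
    if total == 0:
        return {
            "citation_accuracy": 0.0,
            "verification_coverage": 0.0,
            "human_escalation_rate": 0.0,
        }
    return {
        "citation_accuracy": sum(r.has_valid_source for r in records) / total,
        "verification_coverage": sum(r.verifiable for r in records) / total,
        "human_escalation_rate": sum(r.escalated for r in records) / total,
    }


def retrieval_precision(relevant: int, retrieved: int) -> float:
    """Share of retrieved verification results that were actually relevant."""
    return relevant / retrieved if retrieved else 0.0


log = [
    ClaimRecord(verifiable=True, has_valid_source=True, escalated=False),
    ClaimRecord(verifiable=True, has_valid_source=True, escalated=False),
    ClaimRecord(verifiable=True, has_valid_source=False, escalated=True),
    ClaimRecord(verifiable=False, has_valid_source=False, escalated=True),
]
metrics = qa_metrics(log)
print(metrics)
print(retrieval_precision(relevant=3, retrieved=4))
```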

The RAG Paradox

There's a counterintuitive finding in the Sufficient Context research: RAG improves overall performance but may reduce the model's tendency to abstain when appropriate. Additional context can increase model confidence beyond what's warranted.

One response: add relevance checking before using retrieved context. Don't just retrieve - validate that the retrieval actually addresses the question. This aligns with verification-first thinking: validate your inputs before relying on them.
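A relevance gate can be sketched in a few lines. The scoring here is a crude term-overlap stand-in chosen only to keep the example self-contained; a production check would more plausibly use embeddings or a model-based judge. The function names, stopword list, and 0.5 threshold are all assumptions.

```python
import re
from typing import Optional

# Tiny illustrative stopword list (assumption); real systems use a fuller one.
STOPWORDS = {"a", "an", "the", "is", "was", "of", "in", "when", "what", "who"}


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def relevance(question: str, passage: str) -> float:
    """Fraction of the question's content terms that appear in the passage."""
    terms = tokens(question) - STOPWORDS
    if not terms:
        return 0.0
    return len(terms & tokens(passage)) / len(terms)


def relevant_context(
    question: str, passages: list[str], threshold: float = 0.5
) -> Optional[list[str]]:
    """Keep passages that address the question; None means abstain."""
    kept = [p for p in passages if relevance(question, p) >= threshold]
    return kept or None


passages = [
    "TLS 1.3 was standardized in RFC 8446.",
    "Our cafeteria menu changes weekly.",
]
print(relevant_context("When was TLS 1.3 standardized?", passages))
print(relevant_context("Who maintains the billing service?", passages))
```

Returning `None` instead of an empty list makes the abstain path explicit, so downstream code has to decide what to do when retrieval did not address the question.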

Summary

Verification-first is an approach to AI QA that:

- Grounds model review in externally verified facts

- Separates factual verification from reasoning review

- Plays to different model strengths

- Aims to focus human effort on high-value decisions

It's not a complete solution to AI reliability - external tools have limitations, reasoning errors persist, and implementation adds overhead. But for content where factual accuracy matters, grounding review in verified external data can be a meaningful improvement over models reviewing each other's blind spots.

The pattern applies to a range of AI content workflows, and external APIs exist for many verification needs. Combining verification with multi-model review can catch issues that either approach might miss alone.


Sources

AI Hallucination and Detection

- Stop AI Hallucinations: Detection, Prevention & Verification Guide 2025

- Token-Level Truth: Real-Time Hallucination Detection for Production LLMs

- Why Language Models Hallucinate - OpenAI

- Guide to Hallucinations in Large Language Models - Lakera

Knowledge Cutoff and LLM Challenges

- LLM Knowledge Cutoff Dates Complete Guide

- Dated Data: Tracing Knowledge Cutoffs in Large Language Models

- 8 LLM Production Challenges: Problems, Solutions

Multi-Model Verification

- Beyond Ground Truth: LLM Multi-Model Validation

- How to Actually Use Multiple AI Models Together

- Verification and Validation of Systems in Which AI is a Key Element

RAG and Grounding

- Sufficient Context: A New Lens on RAG Systems - Google Research

- Retrieval-Augmented Generation (RAG): 2025 Definitive Guide

- Grounding Overview - Vertex AI

- Groundedness Detection in Azure AI Content Safety

Fact-Checking Workflows

- AI Content Verification Workflow: Step-by-Step Guide

- 6 Steps in Fact-Checking AI-Generated Content - TechTarget

- AI Fact Verification for Reliable Professional Use